This week, we released (most of) the final set of papers from the Planck collaboration — the long-awaited Planck 2018 results (which were originally meant to be the “Planck 2016 results”, but everything takes longer than you hope…), available on the ESA website as well as the arXiv. More importantly for many astrophysicists and cosmologists, the final public release of Planck data is also available.

Anyway, we aren’t quite finished: those of you up on your roman numerals will notice that there are only 9 papers but the last one is “XII” — the rest of the papers will come out over the coming months. So it’s not the end, but at least it’s the beginning of the end.

And it’s been a long time coming. I attended my first Planck-related meeting in 2000 or so (and plenty of people had been working on the projects that would become Planck for a half-decade by that point). For the last year or more, the number of people working on Planck has dwindled as grant money has dried up (most of the scientists now analysing the data are doing so without direct funding for the work).

(I won’t rehash the scientific and technical background to the Planck Satellite and the cosmic microwave background (CMB), which I’ve been writing about for most of the lifetime of this blog.)

Planck 2018: the science

So, in the language of the title of the first paper in the series, what is the legacy of Planck? The state of our science is strong. For the first time, we present full results from both the temperature of the CMB and its polarization. Unfortunately, we don’t actually use all the data available to us: on the largest angular scales, Planck’s results remain contaminated by astrophysical foregrounds and unknown “systematic” errors. This is especially true of our measurements of the polarization of the CMB, which is probably Planck’s most significant limitation.

The remaining data are an excellent match for what is becoming the standard model of cosmology: ΛCDM, or “Lambda-Cold Dark Matter”, which is dominated, first, by a component which makes the Universe accelerate in its expansion (Λ, Greek Lambda), usually thought to be Einstein’s cosmological constant; and secondarily by an invisible component that seems to interact only by gravity (CDM, or “cold dark matter”). We have tested for more exotic versions of both of these components, but the simplest model seems to fit the data without needing any such extensions. We also observe the atoms and light which comprise the more prosaic kinds of matter we observe in our day-to-day lives, which make up only a few percent of the Universe.

All together, the sum of the densities of these components is just enough to make the curvature of the Universe exactly flat through Einstein’s General Relativity and its famous relationship between the amount of stuff (mass) and the geometry of space-time. Furthermore, we can measure the way the matter in the Universe is distributed as a function of the length scale of the structures involved. All of these are consistent with the predictions of the famous or infamous theory of cosmic inflation, which expanded the Universe when it was much less than one second old by factors of more than 10²⁰. This made the Universe appear flat (think of zooming into a curved surface) and expanded the tiny random fluctuations of quantum mechanics so quickly and so much that they eventually became the galaxies and clusters of galaxies we observe today. (Unfortunately, we still haven’t observed the long-awaited primordial B-mode polarization that would be a somewhat direct signature of inflation, although the combination of data from Planck and BICEP2/Keck gives the strongest constraint to date.)
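The flatness statement above can be written compactly. This is the standard textbook form rather than anything quoted from the Planck papers; the symbols (Ω_i for the density of each component in units of the critical density ρ_crit, and Ω_K for the curvature term) are notation introduced here purely for illustration.

```latex
\Omega_i \equiv \frac{\rho_i}{\rho_{\rm crit}}, \qquad
\rho_{\rm crit} = \frac{3 H_0^2}{8 \pi G}, \qquad
\Omega_K = 1 - \sum_i \Omega_i
```

A flat Universe corresponds to Ω_K = 0: the densities of Λ, cold dark matter, atoms and light add up to the critical density.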

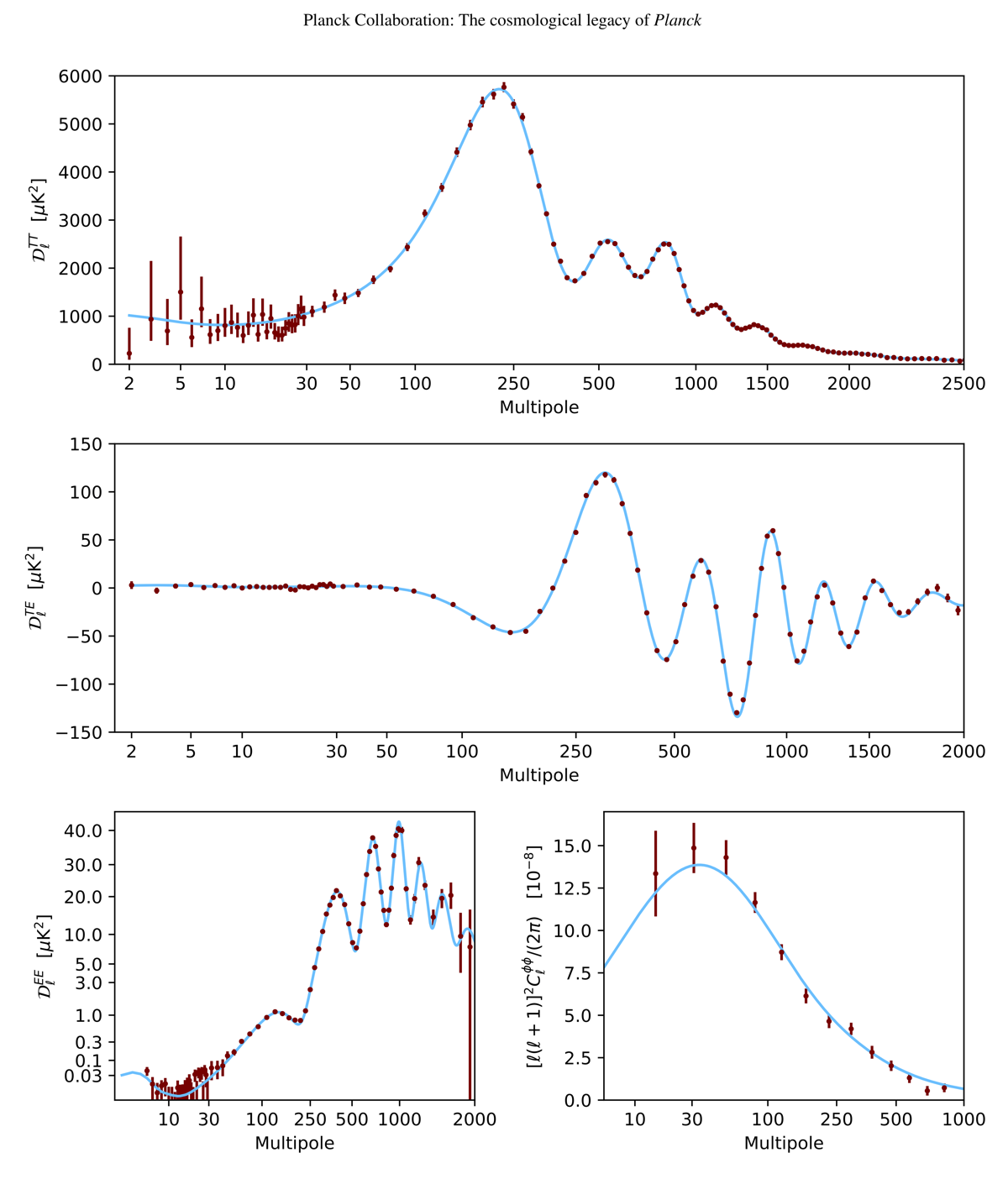

Most of these results are encoded in a function called the CMB power spectrum, something I’ve shown here on the blog a few times before, but I never tire of the beautiful agreement between theory and experiment, so I’ll do it again:

(The figure is from the Planck “legacy” paper; more details are in others in the 2018 series, especially the Planck “cosmological parameters” paper.) The top panel gives the power spectrum for the Planck temperature data, the second panel the cross-correlation between temperature and the so-called E-mode polarization, the left bottom panel the polarization-only spectrum, and the right bottom the spectrum from the gravitational lensing of CMB photons due to matter along the line of sight. (There are also spectra for the B mode of polarization, but Planck cannot distinguish these from zero.) The points are the measurements, shown with “one sigma” error bars, and the blue curve gives the best fit model.

As an important aside, these spectra per se are not used to determine the cosmological parameters; rather, we use a Bayesian procedure to calculate the likelihood of the parameters directly from the data. On small scales (corresponding to 𝓁>30 since 𝓁 is related to the inverse of an angular distance), estimates of spectra from individual detectors are used as an approximation to the proper Bayesian formula; on large scales (𝓁<30) we use a more complicated likelihood function, calculated somewhat differently for data from Planck’s High- and Low-frequency instruments, which captures more of the details of the full Bayesian procedure (although, as noted above, we don’t use all possible combinations of polarization and temperature data to avoid contamination by foregrounds and unaccounted-for sources of noise).
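To make the split at 𝓁 = 30 a little more concrete, here is a minimal sketch of the rule of thumb that a multipole 𝓁 corresponds to an angle of roughly 180°/𝓁. The function names and the labels are hypothetical, invented for this illustration, and treating 𝓁 = 30 itself as part of the small-scale regime is an assumption (the text above only says 𝓁<30 and 𝓁>30). None of this is Planck's actual likelihood code.

```python
# Rough conversion between multipole and angular scale, plus a toy
# version of the large-scale / small-scale split described above.
# All names are hypothetical; this is not the Planck likelihood code.

SPLIT_ELL = 30  # boundary quoted in the text


def multipole_to_degrees(ell: float) -> float:
    """Approximate angular scale in degrees, using theta ~ 180 / ell."""
    if ell <= 0:
        raise ValueError("multipole must be positive")
    return 180.0 / ell


def likelihood_regime(ell: int) -> str:
    """Label which kind of likelihood would cover this multipole.

    Assumption: ell == SPLIT_ELL is assigned to the small-scale regime.
    """
    return "large-scale" if ell < SPLIT_ELL else "small-scale"


if __name__ == "__main__":
    for ell in (2, 30, 1000):
        angle = multipole_to_degrees(ell)
        print(f"ell = {ell:4d}: ~{angle:.2f} degrees, {likelihood_regime(ell)}")
```

So the dividing line sits at patches of sky about six degrees across, more than ten times the apparent width of the full Moon.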

Of course, not all cosmological data, from Planck and elsewhere, seem to agree completely with the theory. Perhaps most famously, local measurements of how fast the Universe is expanding today, the Hubble constant, give a value of H₀ = (73.52 ± 1.62) km/s/Mpc (the units give how much faster something is moving away from us, in km/s, as it gets further away, measured in megaparsecs (Mpc)), whereas Planck (which infers the value within a constrained model) gives (67.27 ± 0.60) km/s/Mpc. This is a pretty significant discrepancy and, unfortunately, it seems difficult to find an interesting cosmological effect that could be responsible for these differences. Rather, we are forced to expect that it is due to one or more of the experiments having some unaccounted-for source of error.
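For a rough sense of how significant that discrepancy is, one can divide the difference between the two values by their combined uncertainty. This is only a back-of-the-envelope sketch: it assumes the two measurements are independent with Gaussian errors, and the function name is made up for this example.

```python
import math


def tension_in_sigma(value_a: float, err_a: float,
                     value_b: float, err_b: float) -> float:
    """Difference between two measurements in units of their combined error.

    Assumes independent Gaussian uncertainties, added in quadrature.
    """
    return abs(value_a - value_b) / math.hypot(err_a, err_b)


# Values quoted in the text above, in km/s/Mpc.
local_h0, local_err = 73.52, 1.62
planck_h0, planck_err = 67.27, 0.60

tension = tension_in_sigma(local_h0, local_err, planck_h0, planck_err)
print(f"Hubble constant tension: about {tension:.1f} sigma")
```

With the numbers quoted here this comes out at about 3.6 sigma, which is why the disagreement is hard to wave away as a simple fluke.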

The term of art for these discrepancies is “tension” and indeed there are a few other “tensions” between Planck and other datasets, as well as within the Planck data itself: weak gravitational lensing measurements of the distortion of light rays due to the clustering of matter in the relatively nearby Universe show evidence for slightly weaker clustering than that inferred from Planck data. There are tensions even within Planck, when we measure the same quantities by different means (including things related to similar gravitational lensing effects). But, just as “half of all three-sigma results are wrong”, we expect that we’ve mis- or under-estimated (or to quote the no-longer-in-the-running-for-the-worst president ever, “misunderestimated”) our errors much or all of the time and should really learn to expect this sort of thing. Some may turn out to be real, but many will be statistical flukes or systematic experimental errors.

(If you were looking for a briefer but more technical fly-through of the Planck results, from someone not on the Planck team, check out Renee Hlozek’s tweetstorm.)

Planck 2018: lessons learned

So, Planck has more or less lived up to its advance billing as providing definitive measurements of the cosmological parameters, while still leaving enough “tensions” and other open questions to keep us cosmologists working for decades to come (we are already planning the next generation of ground-based telescopes and satellites for measuring the CMB).

But did we do things in the best possible way? Almost certainly not. My colleague (and former grad student!) Joe Zuntz has pointed out that we don’t use any explicit “blinding” in our statistical analysis. The point is to avoid our own biases when doing an analysis: you don’t want to stop looking for sources of error when you agree with the model you thought would be true. This works really well when you can enumerate all of your sources of error and then simulate them. In practice, most collaborations (such as the Polarbear team with whom I also work) choose to un-blind some results exactly to be able to find such sources of error, and indeed this is the motivation behind the scores of “null tests” that we run on different combinations of Planck data. We discuss this a little in an appendix of the “legacy” paper — null tests are important, but we have often found that a fully blind procedure isn’t powerful enough to find all sources of error, and in many cases (including some motivated by external scientists looking at Planck data) it was exactly low-level discrepancies within the processed results that have led us to new systematic effects. A more fully-blind procedure would be preferable, of course, but I hope this is a case of the great being the enemy of the good (or good enough). I suspect that those next-generation CMB experiments will incorporate blinding from the beginning.

Further, although we have released a lot of software and data to the community, it would be very difficult to reproduce all of our results. Nowadays, experiments are moving toward a fully open-source model, where all the software is publicly available (in Planck, not all of our analysis software was available to other members of the collaboration, much less to the community at large). This does impose an extra burden on the scientists, but it is probably worth the effort, and again, needs to be built into the collaboration’s policies from the start.

That’s the science and methodology. But Planck is also important as having been one of the first of what is now pretty standard in astrophysics: a collaboration of many hundreds of scientists (and many hundreds more of engineers, administrators, and others without whom Planck would not have been possible). In the end, we persisted, and persevered, and did some great science. But I learned that scientists need to learn to be better at communicating, both from the top of the organisation down, and from the “bottom” (I hesitate to use that word, since that is where much of the real work is done) up, especially when those lines of hoped-for communication are usually between different labs or Universities, very often between different countries. Physicists, I have learned, can be pretty bad at managing — and at being managed. This isn’t a great combination, and I say this as a middle-manager in the Planck organisation, very much guilty on both fronts.